Privacy Paradox

Although survey results show that the privacy of their personal data is an important issue for online users worldwide, most users rarely make an active effort to protect this data and often even give it away voluntarily. Privacy researchers have made several attempts to explain this dichotomy between privacy attitude and behavior, usually referred to as the ‘privacy paradox’. While they have proposed different theoretical explanations for the privacy paradox and reported empirical results on how individual factors relate to privacy behavior and attitude, no comprehensive explanation has been found so far. We aim to shed light on the privacy paradox by summarizing the most popular theoretical explanations and identifying the factors that are most relevant for predicting privacy attitude and behavior. Since many studies focus on behavioral intention instead of actual behavior, we consider intention as well.

Research method

Based on a literature review, we identify all factors that significantly predict one of the three privacy aspects (attitude, intention and behavior) and report the corresponding standardized effect sizes (β). The results provide strong evidence for the theoretical explanation approach called ‘privacy calculus’: the benefits a user expects to gain are among the best predictors of disclosing intention as well as actual disclosure. Other strong predictors of privacy behavior are privacy intention, willingness to disclose, privacy concerns and privacy attitude. Demographic variables play a minor role; only gender was found to weakly predict privacy behavior. Privacy attitude was best predicted by internal variables like trust towards the website, privacy concerns or computer anxiety. Despite the multiplicity of survey studies dealing with user privacy, it is not easy to draw overall conclusions, because authors often refer to slightly different constructs. We suggest that the privacy research community agree on a shared definition of the different privacy constructs to allow for conclusions beyond individual samples and study designs.

This website gives an overview of the topic. Further information can be found in: Nina Gerber, Paul Gerber, Melanie Volkamer. Explaining the privacy paradox: A systematic review of literature investigating privacy attitude and behavior. Computers & Security, Volume 77, August 2018, Pages 226-261.

The following section describes the most popular explanations for the privacy paradox that have been proposed so far. A prior review of possible explanation approaches can be found in Kokolakis (2017).

Privacy Calculus

One of the most established explanations for the privacy paradox is based on the theoretical concept of the ‘homo oeconomicus’. In the economic sciences, the term ‘homo oeconomicus’ refers to the prototype of an economic human, a consumer whose decisions and actions are all driven by the attempt to maximize his/her benefits (Rittenberg and Trigarthen, 2012; Flender and Müller, 2012). If this concept is applied to the privacy context, a user is expected to weigh the benefits that could be earned by data disclosure against the costs that could arise from revealing his/her data (Lee and Kwon, 2015). Typical benefits of sharing personal data include financial discounts (e.g., by participating in consumer loyalty programs), increased convenience (e.g., by keeping credit card data stored with an online retailer) or improved socialization (e.g., by using social networks and messengers) (Wang et al., 2015; Wilson and Valacich, 2012). Data sharing costs, on the other hand, are less tangible and include all sorts of risks and negative consequences of disclosing personal data (e.g., security impairments, identity theft, unintended third-party usage, or social criticism and humiliation) (Warshaw et al., 2015). According to the privacy calculus model, if the anticipated benefits of data sharing exceed the costs, a user is expected to willingly give his/her data away (Lee and Kwon, 2015). Nevertheless, s/he can still express concerns about the loss of his/her data, leading to the observed discrepancy between the expressed concerns or attitude and the actual behavior.
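The trade-off described above can be written down as a simple decision rule. The following is only a minimal sketch: the class name, the field names and all numeric values are hypothetical and chosen for illustration; none of the cited studies prescribes this particular formulation.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class DisclosureDecision:
    """One data-sharing decision with subjective, user-assigned values (hypothetical model)."""
    benefits: Dict[str, float]  # anticipated benefit -> perceived value
    costs: Dict[str, float]     # anticipated cost -> perceived (risk-weighted) value
    stated_concern: float       # self-reported concern, e.g. on a 1-7 scale

    def net_benefit(self) -> float:
        # Sum of anticipated benefits minus sum of anticipated costs.
        return sum(self.benefits.values()) - sum(self.costs.values())

    def discloses(self) -> bool:
        # Privacy calculus rule: share when anticipated benefits exceed costs.
        # Note that stated_concern plays no role in the decision itself.
        return self.net_benefit() > 0


# Hypothetical values, chosen only for illustration.
loyalty_program = DisclosureDecision(
    benefits={"discount": 3.0, "convenience": 2.0},
    costs={"third_party_usage": 1.5, "identity_theft": 2.0},
    stated_concern=6.0,
)

print(loyalty_program.net_benefit())  # 1.5
print(loyalty_program.discloses())    # True, despite the high stated concern
```

In this sketch the stated concern is stored but never enters the decision, which mirrors the point made above: a user can report strong concerns and still disclose because the calculus comes out positive.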

Bounded Rationality & Decision Biases

The privacy calculus model described above postulates the existence of a rational user who performs reasoned trade-off analyses when deciding whether to share (or protect) his/her data. However, numerous studies on consumer decision behavior have shown that the decision-making process is affected by various cognitive biases and heuristics (Acquisti and Grossklags, 2007; Knijnenburg et al., 2013). For example, it is unlikely that every consumer accesses exhaustive information concerning all possible costs and benefits when making a data sharing decision; on the contrary, consumers are often not even aware that their data is being collected (Wakefield, 2013). Hence, their decision is based on incomplete information, which can lead to the over- or underestimation of the costs and benefits. Such a decision might seem irrational to an external observer, but fairly rational to the decision maker (Flender and Müller, 2012). Furthermore, the human capacity for cognitive processing is limited, which means that even a consumer with access to all necessary information might lack the ability to process it correctly and make an informed decision (Deuker, 2011). In the literature, this effect is often referred to as bounded rationality (Flender and Müller, 2012; Knijnenburg et al., 2013). The resulting imperfect decisions often suffer from cognitive biases, because the decision maker employs certain heuristics to compensate for his/her bounded rationality (Kokolakis, 2017; Symantec, 2015; Wakefield, 2013; Zafeiropoulou et al., 2013; Tversky and Kahneman, 1974). Hence, the resulting behavior might not reflect the original intention or the expressed attitude towards that behavior. Popular examples of these cognitive biases are:

- The availability bias: People tend to overestimate the probability of events they can easily recall, e.g., because they are very present in the media (Schwarz et al., 1991).

- The optimism bias: People tend to believe that they are at less risk of experiencing a negative privacy event compared to others (Cho et al., 2010).

- The confirmation bias: People tend to search for or interpret information in a way that confirms their beliefs and assumptions (Plous, 1993).

- The affect bias: People judge quickly based on their affective impressions, thereby underestimating the risks of things they like and overestimating the risks of things they dislike (Slovic et al., 2002).

- The immediate gratification bias, sometimes also referred to as hyperbolic discounting: People tend to value present benefits or risks more than those that lie in the future (Acquisti and Grossklags, 2003).

- The valence effect: People tend to overestimate the likelihood of favorable events (Gold and Brown, 2009).

- The framing effect: People respond differently dependent on the way a question is framed or information is presented (Tversky and Kahneman, 1981).

- The phenomenon of rational ignorance: People ignore the potential costs of data sharing because the costs for learning them, e.g., by reading the privacy policies, would be higher than the expected benefits from sharing the data (Downs, 1957).
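
Of the biases listed above, hyperbolic discounting has a commonly used closed form: the perceived value of an outcome of size A that lies D time units in the future is A / (1 + k·D), where k is an individual discount rate. The sketch below uses this form with hypothetical numbers (the function name, the value of k and the amounts are assumptions, not taken from the cited studies) to show how a small immediate benefit can outweigh a larger future cost.

```python
def hyperbolic_value(amount: float, delay_days: float, k: float) -> float:
    """Perceived present value of an outcome that lies delay_days in the future."""
    if delay_days < 0 or k < 0:
        raise ValueError("delay_days and k must be non-negative")
    return amount / (1.0 + k * delay_days)


# Hypothetical numbers: a small benefit now versus a larger cost in one year.
immediate_benefit = hyperbolic_value(5.0, delay_days=0, k=0.01)
delayed_cost = hyperbolic_value(20.0, delay_days=365, k=0.01)

# The discounted cost (about 4.3) is perceived as smaller than the
# immediate benefit (5.0), so sharing looks attractive in the moment.
print(immediate_benefit > delayed_cost)  # True
```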

Lack of Personal Experience and Protection Knowledge

Another explanation accounts for the fact that few users have actually suffered from online privacy invasions. As a consequence, most privacy attitudes are based on heuristics or secondhand experiences. However, only personal experiences can form an attitude that is stable enough to significantly influence the corresponding behavior (Dienlin and Trepte, 2015). In addition to the resulting weak association between attitude and behavior, some users might simply lack the ability to protect their data, because they have no or only limited knowledge of technical solutions like the deletion of cookies, the encryption of e-mails or the anonymization of communication data, e.g., by using the Tor software (Baek, 2014).

Social Influence

Most people are not autonomous in their decision to accept or reject the usage of a messaging application, a social network or e-mail encryption software. Rather, it is assumed that the social environment of an individual significantly influences his/her privacy decisions and behavior (Taddicken, 2014). Users follow social norms especially in collectivistic cultures, where individuals possess a strong ‘we’ consciousness (Beldad and Citra Kusumadewi, 2015). But social influence also occurs in individualistic cultures, for instance when teenagers follow the example of their parents when it comes to data sharing in social networks (Van Gool et al., 2015). In both kinds of culture, individuals usually take the (supposed) opinion and behavior of their peers and/or family into account, at least to some extent, when deciding to use a specific technology or reveal their data. If significant others tend to self-disclose personal information, e.g., on social networks, some kind of social pressure can occur, possibly built on an idea of reciprocity, i.e., ‘if they disclose data it would be unfair not to do the same’ (Flender and Müller, 2012). Sometimes, the decision not to share personal data can even become a social stigma, for anyone who refuses to disclose his/her habits, actions and attitudes ‘must have something terrible to hide’ (Hull, 2015). Hence, actual behavior is most likely affected by social factors, whereas the expressed attitude supposedly reflects the unbiased opinion of the respective individual.

The Risk and Trust Model

It is most likely that the perceived risk of disclosing data, as well as the trustworthiness of the recipient, affects the data sharing attitude and behavior of an individual. Some authors explain the privacy paradox by assuming that trust has a direct influence on privacy behavior, whereas the perceived risk influences the reported attitude and behavioral intention, but not strongly enough to affect the actual behavior (Norberg et al., 2007). Trust, which is an environmental factor, has a stronger effect in concrete decision situations (i.e., behavior). The perceived risk, on the other hand, dominates in abstract decision situations, for example when a user is asked if s/he would be willing to share his/her data in a hypothetical situation (Flender and Müller, 2012), thereby producing the dichotomy between the reported attitude and the actual behavior.

Quantum Theory

Relying on quantum theory, Flender and Müller (2012) propose another explanation for the privacy paradox. If human decision-making is subject to the same effects as the measurement process in quantum experiments, we can assume that the outcome of a decision process is not determined until the actual decision is made (Kokolakis, 2017) and that two decisions are not interchangeable (Flender and Müller, 2012). Hence, if an individual is asked about a potential decision outcome prior to actually making the decision (i.e., attitude rather than behavior is assessed), his/her answer does not necessarily reflect the actual decision outcome.

Illusion of Control

In a series of studies, Brandimarte et al. (2013) examined the hypothesis that users suffer from an ‘illusion of control’ when dealing with the privacy of their data. They found that users indeed seem to confuse control over the publication of information with control over the assessment of that information by third parties. Therefore, users are more likely to allow the publication of personal information, and even provide more sensitive information, if they are given explicit control over the publication of their data. If, on the other hand, a third party is responsible for the publication of the same data, users may perceive a loss of control and express concerns about the unauthorized usage of their data by others (Brandimarte et al., 2009). According to this hypothesis, the paradoxical behavior is caused by a false feeling of control over the further usage of personal data, which occurs if users can initially decide on its publication (e.g., by posting in social networks and managing the privacy settings for the post).

The Privacy Paradox as Methodological Artefact

Another potential reason for the dichotomy between behavior and attitude is based on methodological considerations. One explanation may be the inappropriate operationalization of these constructs in the particular studies dealing with the privacy paradox (Dienlin and Trepte, 2015). Behavior is often assessed as a dichotomous answer (for example by asking if someone has a public Facebook profile or not), whereas attitude is measured on a metric (e.g., a Likert-based) scale. However, dichotomous data always implies a potential limitation of variance, which can in turn lead to a reduction of statistical power. Hence, it is possible that in fact there is a strong relationship between attitude and behavior and previous studies just failed to verify this relationship due to their inappropriate operationalization.
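
The effect of a dichotomous behavior measure can be illustrated with a small simulation. This is only a sketch under stated assumptions: the attitude and behavior scores are synthetic, the true correlation of 0.5, the sample size and the median split are arbitrary choices, and the helper names `pearson` and `simulate` are hypothetical. It shows one consequence of the limited variance, namely that the observed attitude-behavior correlation shrinks once behavior is recorded as yes/no.

```python
import math
import random
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate(n=5000, true_r=0.5, seed=1):
    """Return (r with metric behavior, r with dichotomized behavior) for synthetic data."""
    rng = random.Random(seed)
    attitude = [rng.gauss(0, 1) for _ in range(n)]
    # Behavior correlates with attitude at roughly true_r by construction.
    noise_weight = math.sqrt(1 - true_r ** 2)
    behavior = [true_r * a + noise_weight * rng.gauss(0, 1) for a in attitude]
    # Dichotomize behavior with a median split (e.g. "public profile: yes/no").
    cutoff = statistics.median(behavior)
    behavior_binary = [1.0 if b > cutoff else 0.0 for b in behavior]
    return pearson(attitude, behavior), pearson(attitude, behavior_binary)


r_metric, r_binary = simulate()
print(f"metric behavior:      r = {r_metric:.2f}")
print(f"dichotomous behavior: r = {r_binary:.2f}")
```

With the same underlying relationship, the dichotomized measure yields the smaller coefficient, so a study relying on it needs a larger sample to detect the association.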

Another approach is based on the assumption of a multi-dimensional nature of privacy. Dienlin and Trepte (2015) suggest that it is important to distinguish between privacy attitudes and privacy concerns on the one hand, and between informational, social and psychological privacy on the other hand. Indeed, a corresponding study by Dienlin and Trepte (2015), which accounts for these different facets of privacy, revealed an indirect effect of privacy concerns on privacy behavior. Specifically, privacy concerns had an effect on privacy attitudes, which in turn influenced privacy intentions, which finally influenced privacy behavior.
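
In path analysis, the size of such an indirect effect is usually computed as the product of the standardized coefficients along the chain. The sketch below applies this rule to the chain concerns → attitudes → intentions → behavior. The coefficients are hypothetical and are not the values reported by Dienlin and Trepte (2015); the function name is likewise an assumption.

```python
import math
from typing import Sequence


def indirect_effect(path_coefficients: Sequence[float]) -> float:
    """Product of the standardized coefficients along one mediation chain."""
    return math.prod(path_coefficients)


# Hypothetical coefficients, not values reported by Dienlin and Trepte (2015):
# concerns -> attitudes -> intentions -> behavior
chain = [0.5, 0.5, 0.5]
print(indirect_effect(chain))  # 0.125
```

Even with moderate links at every step, the product is small, which is one way to see why a direct comparison of concerns and behavior can show only a weak association.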

So far, no definite explanation for the privacy paradox has been proposed. However, considering the variety of possible explanations, which either interpret the phenomenon or develop extensive models to shed light on it, the dichotomy between privacy attitudes, concerns or perceived risk and privacy behavior should no longer be perceived as a paradox. To further understand which factors relate to user privacy, we report in the paper the standardized effect sizes (β) found in the included studies for the association of the different predictor variables with privacy attitude, privacy concern, perceived privacy risk, privacy intention and privacy behavior.

References

Acquisti A, Grossklags J. Losses, gains, and hyperbolic discounting: an experimental approach to information security attitudes and behavior. Proceedings of the second annual workshop on economics and information security (WEIS 2003); 2003

Acquisti A, Grossklags J. What can behavioral economics teach us about privacy? In: Acquisti A, Gritzalis S, Lambrinoudakis C, di Vimercati S, editors. Digital privacy: theory, technology, and practices. Boca Raton: Auerbach Publications; 2007. p. 363–77

Baek YM. Solving the privacy paradox: a counter-argument experimental approach. Comput Hum Behav 2014;38:33–42. doi: 10.1016/j.chb.2014.05.006

Beldad A, Citra Kusumadewi M. Here’s my location, for your information: the impact of trust, benefits, and social influence on location sharing application use among Indonesian university students. Comput Hum Behav 2015;49:102–10. doi: 10.1016/j.chb.2015.02.047

Brandimarte L, Acquisti A, Loewenstein G. Privacy concerns and information disclosure: an illusion of control hypothesis. Proceedings of the poster iConference; 2009

Brandimarte L, Acquisti A, Loewenstein G. Misplaced confidences: privacy and the control paradox. Soc Psychol Personal Sci 2013;4(3):340–7. doi: 10.1177/1948550612455931

Cho H, Lee JS, Chung S. Optimistic bias about online privacy risks: testing the moderating effects of perceived controllability and prior experience. Comput Hum Behav 2010;26(5):987–95

Dienlin T, Trepte S. Is the privacy paradox a relic of the past? An in-depth analysis of privacy attitudes and privacy behaviors. Eur J Soc Psychol 2015;45(3):285–97. doi: 10.1002/ejsp.2049

Downs A. An economic theory of democracy. New York: Harper & Brothers; 1957

Flender C, Müller G. Type indeterminacy in privacy decisions: the privacy paradox revisited. In: Busemeyer JR, Dubois F, Lambert-Mogiliansky A, Melucci M, editors. Quantum interaction. Berlin Heidelberg: Springer; 2012. p. 148–59

Gold RS, Brown MG. Explaining the effect of event valence on unrealistic optimism. Psychol Health Med 2009;14(3):262–72. doi: 10.1080/13548500802241910

Hull G. Successful failure: what Foucault can teach us about privacy self-management in a world of Facebook and big data. Ethics Inf Technol 2015;17(2):89–101. doi: 10.1007/s10676-015-9363-z

Knijnenburg BP, Kobsa A, Jin H. Dimensionality of information disclosure behavior. Int J Hum Comput Stud 2013;71(12):1144–62. doi: 10.1016/j.ijhcs.2013.06.003

Kokolakis S. Privacy attitudes and privacy behaviour: a review of current research on the privacy paradox phenomenon. Comput Secur 2017;64:122–34. doi: 10.1016/j.cose.2015.07.002

Lee N, Kwon O. A privacy-aware feature selection method for solving the personalization-privacy paradox in mobile wellness healthcare services. Expert Syst Appl 2015;42(5):2764–71. doi: 10.1016/j.eswa.2014.11.031

Norberg PA, Horne DR, Horne DA. The privacy paradox: personal information disclosure intentions versus behaviors. J Consum Affairs 2007;41(1):100–26. doi: 10.1111/j.1745-6606.2006.00070.x

Plous S. The psychology of judgment and decision making. New York: McGraw-Hill Inc; 1993

Rittenberg L, Trigarthen T. Principles of microeconomics. Washington, DC: Flat World Knowledge, Inc.; 2012

Schwarz N, Bless H, Strack F, Klumpp G, Rittenauer-Schatka H, Simons A. Ease of retrieval as information: another look at the availability heuristic. J Personal Social Psychol 1991;61:195–202

Slovic P, Finucane M, Peters E, MacGregor GD. The affect heuristic. In: Gilovich T, Griffin WD, Kahneman D, editors. Heuristics and biases. Cambridge University Press; 2002. p. 397–420

Symantec. State of privacy report 2015. Symantec; 2015. http://www.symantec.com/content/en/us/about/presskits/b-state-of-privacy-report-2015.pdf/ Accessed 01 March 2017

Taddicken M. The “privacy paradox” in the social web: the impact of privacy concerns, individual characteristics, and the perceived social relevance on different forms of self-disclosure. J Comput Mediat Commun 2014;19(2):248–73. doi: 10.1111/jcc4.12052

Tversky A, Kahneman D. Judgement under uncertainty: heuristics and biases. Science 1974;185(4157):1124–31

Tversky A, Kahneman D. The framing of decisions and the psychology of choice. Science 1981;211(4481):453–8. doi: 10.1126/science.7455683

Van Gool E, Van Ouytsel J, Ponnet K, Walrave M. To share or not to share? Adolescents’ self-disclosure about peer relationships on facebook: an application of the prototype willingness model. Comput Hum Behav 2015;44:230–9. doi: 10.1016/j.chb.2014.11.036

Wakefield R. The influence of user affect in online information disclosure. J Strateg Inf Syst 2013;22(2):157–74. doi: 10.1016/j.jsis.2013.01.003

Wang N, Zhang B, Liu B, Jin H. Investigating effects of control and ads awareness on android users’ privacy behaviors and perceptions. Proceedings of the seventeenth international conference on human–computer interaction with mobile devices and services; 2015. p. 373–82

Warshaw J, Matthews T, Whittaker S, Kau C, Bengualid M, Smith BA. Can an algorithm know the “real you”? Understanding people’s reactions to hyper-personal analytics systems. Proceedings of the thirty-third annual ACM conference on human factors in computing systems; 2015. p. 797–806

Wilson D, Valacich J. Unpacking the privacy paradox: irrational decision-making within the privacy calculus. Proceedings of the thirty-third international conference on information systems; 2012. p. 4152–62

Zafeiropoulou AM, Millard DE, Webber C, O’Hara K. Unpicking the privacy paradox: can structuration theory help to explain location-based privacy decisions? Proceedings of the ACM Web Science Conference; 2013. p. 463–72

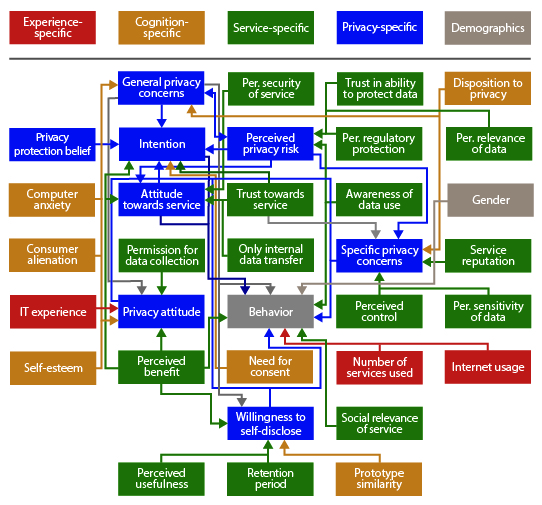

Model

The following graph describes the relationships between variables that affect privacy attitude, intention, and behavior.